We all have our ways of sharing thoughts and feelings: speaking, signing, writing notes, or making gestures. But imagine if all of these paths were suddenly out of reach. That's the reality for people with Locked-in Syndrome (LIS), who are fully aware and able to understand everything around them but cannot move or speak because of almost complete paralysis. The only way they can express themselves is through eye movement.

Inspired by their resilience, we set out to turn those eye movements into a powerful means of communication. That's how GazeAviator was born. It's a graphical user interface (GUI) that lets eye movements take control, turning a simple look and blink into commands. We started with controlling drones because, let's face it, drones are cool and offer a wide range of movements like flying up, down, left, and right. But the beauty of GazeAviator is that it's not just about drones. It can be customized to control wheelchairs or send specific messages and requests, giving a voice to those who thought they'd lost it.

The GUI is straightforward: it displays commands like lifting, landing, and steering, and all users need to do is gaze at the command they want and blink. It's like having a conversation without saying a word, using just your eyes to speak loud and clear.

Eye Tracking

In eye tracking, Video Oculography (VOG) and Electrooculography (EOG) are two notable methods. VOG tracks eye movements with a camera, so it needs good lighting to perform well, and the equipment tends to be costly. EOG, in contrast, detects the small electrical signals generated by eye movements. It is inexpensive and works in any lighting condition, which is why we chose it as the foundation of our project.

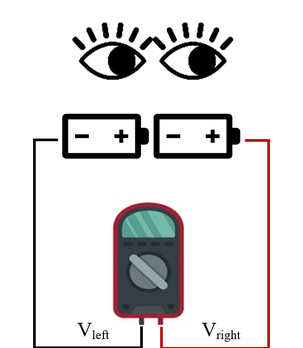

EOG operates on the principle of the electrical potential difference between the cornea, which acts as the positive pole, and the retina, the negative pole. This creates a corneoretinal potential, turning the eye into an effective electric dipole (Fig. 1).

Fig. 1. The electrical potential between the cornea and the retina of the human eye (EOG).

As the eye shifts position, this dipole's orientation changes, altering the potential difference that can be detected at the skin's surface with strategically placed electrodes. For instance, moving the eyes left increases the potential at the left eye's outer corner and decreases it at the right eye's outer corner, so the potential difference (right minus left) becomes negative. Conversely, looking right reverses this effect, creating a positive potential difference. When the eyes are stationary and facing forward, the potential difference measured by the electrodes fluctuates around zero, providing a baseline for eye movement detection (Fig. 2).

Left Gaze: V left > V right

Front Gaze: V right = V left

Right Gaze: V right > V left

Fig. 2. EOG principle
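To make the three cases concrete, here is a minimal sketch that classifies horizontal gaze from the two electrode potentials. The function name and the `dead_band` tolerance are our own assumptions for illustration; a real signal is noisy, so "equal" is treated as "within a small band around zero".

```python
def gaze_direction(v_left: float, v_right: float, dead_band: float = 0.05) -> str:
    """Classify horizontal gaze from the two outer-canthus potentials.

    dead_band is a hypothetical tolerance (same units as the inputs) that
    absorbs the small fluctuations around zero seen when looking straight ahead.
    """
    diff = v_right - v_left  # right minus left, as in the text above
    if diff > dead_band:
        return "right"   # V right > V left
    if diff < -dead_band:
        return "left"    # V left > V right
    return "front"       # V right is roughly equal to V left


if __name__ == "__main__":
    print(gaze_direction(v_left=0.30, v_right=0.10))
    print(gaze_direction(v_left=0.20, v_right=0.21))
    print(gaze_direction(v_left=0.10, v_right=0.30))
```

The dead band is a design choice: without it, baseline noise would flip the result between left and right on every sample.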

Hardware Setup

In this project, silver (Ag) electrodes are used to measure EOG signals. To measure vertical eye movements, electrodes are attached above and below one eye. A reference electrode is affixed behind the ear. To capture horizontal movements, one electrode is placed approximately 1 cm from the outer canthus of each eye (two in total).

The signals from the electrodes are then channeled into a "BioAmp EXG Pill" analog-front-end (AFE) biopotential signal-acquisition board. The board's purpose is to amplify the raw EOG signals to a level suitable for effective digitization and processing. Given the weak nature of the signals emitted by the human eye, such amplification is a vital step in securing accurate and reliable data acquisition.

This module incorporates a Driven Right Leg (DRL) circuit to mitigate common-mode noise, which can result from a variety of sources. The DRL circuit functions by first identifying the common-mode voltage present in the EOG signal inputs to the differential amplifier. It then inverts this voltage and reintroduces it to the body through the "right leg" electrode. This action effectively diminishes the common-mode voltage that the amplifier detects, thereby reducing the impact of noise on the EOG signal. Additionally, the board is equipped with an amplifier and a bandpass filter with an inherent differentiator, which makes the analog output signal (0 to 5 V) appear as spikes. (A soldering iron and solder wire are required to attach the header pins to the module.)

Following amplification and filtering, the analog signal needs to be digitized so the computer can process it. This is done by feeding the analog signal to the Analog-to-Digital Converter (ADC) inputs of the Arduino Uno (the vertical and horizontal EOG channels are connected to pins A0 and A1, respectively). This ADC has a resolution of 10 bits, meaning the analog signal, which ranges from 0 to 5 V, is scaled to a digital value ranging from 0 to 1023.
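As a quick illustration of that scaling, the sketch below converts a raw ADC reading back into volts. The names `ADC_LEVELS`, `V_REF`, and `counts_to_volts` are ours, and we assume the Uno's default 5 V reference. With 10 bits there are 1024 levels, so each step is about 4.9 mV and the top reading of 1023 sits just under 5 V.

```python
ADC_LEVELS = 1024  # 10-bit ADC: 2**10 distinct levels (readings 0..1023)
V_REF = 5.0        # assumed reference voltage in volts (Arduino Uno default)


def counts_to_volts(counts: int) -> float:
    """Convert a raw 10-bit ADC reading into an approximate input voltage."""
    if not 0 <= counts < ADC_LEVELS:
        raise ValueError(f"ADC reading out of range: {counts}")
    return counts * V_REF / ADC_LEVELS


if __name__ == "__main__":
    for raw in (0, 512, 1023):
        print(raw, round(counts_to_volts(raw), 4))
```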

Once digitized, the signal is transmitted to the NVIDIA Jetson Orin Nano development kit over USB serial at 9600 baud. The Jetson Orin Nano connects to the DJI Tello drone over Wi-Fi, providing real-time control based on the user's eye movements. The full schematic is shown in Fig. 3.
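On the receiving side, the serial stream has to be turned back into numbers. The sketch below is one way to do that, under assumptions that are not part of the original setup description: the Arduino prints one `vertical,horizontal` pair per line, the port is `/dev/ttyACM0`, and the `pyserial` package is installed. It also estimates how many samples per second fit through a 9600 baud link, given that the usual 8N1 framing spends 10 bits on the wire per byte.

```python
from typing import Iterator, Optional, Tuple

BAUD_RATE = 9600


def parse_sample(line: bytes) -> Optional[Tuple[int, int]]:
    """Parse one 'vertical,horizontal' line (assumed format); None if malformed."""
    try:
        v_str, h_str = line.decode("ascii").strip().split(",")
        vertical, horizontal = int(v_str), int(h_str)
    except ValueError:  # bad encoding, wrong field count, or non-numeric text
        return None
    if not (0 <= vertical <= 1023 and 0 <= horizontal <= 1023):
        return None     # outside the 10-bit ADC range
    return vertical, horizontal


def max_samples_per_second(baud: int, bytes_per_sample: int) -> float:
    """Upper bound on sample rate with 8N1 framing (10 bits sent per byte)."""
    return baud / 10 / bytes_per_sample


def read_samples(port: str = "/dev/ttyACM0") -> Iterator[Tuple[int, int]]:
    """Yield parsed samples from the serial port (port name is hypothetical)."""
    import serial  # pyserial; imported lazily so the parser works without it

    with serial.Serial(port, BAUD_RATE, timeout=1) as ser:
        while True:
            sample = parse_sample(ser.readline())
            if sample is not None:
                yield sample


if __name__ == "__main__":
    print(parse_sample(b"512,498\r\n"))
    # Worst case line "1023,1023\r\n" is 11 bytes long.
    print(round(max_samples_per_second(BAUD_RATE, 11), 1))
```

Dropping malformed lines instead of raising keeps the reader alive when the first line after connecting arrives half-written, which is common with USB serial.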

AI Pipeline

The goal of this project is to enable eye-controlled drone navigation via a graphical user interface (GUI). We envisioned a GUI with command buttons that follows the user's gaze as it shifts across the screen and marks the gaze location with a circle. More specifically, when the user's gaze stays fixed on a button and is followed by a blink, the GUI sends the corresponding command to the drone.
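The "fixate, then blink" rule can be sketched as a small state machine. Everything here is illustrative: the `Button` and `GazeSelector` names, the button layout, and the `dwell_samples` threshold are assumptions, and blink detection is taken as an already-computed boolean.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Button:
    command: str
    x: int
    y: int
    width: int
    height: int

    def contains(self, px: float, py: float) -> bool:
        """True if the gaze point falls inside this button's rectangle."""
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


class GazeSelector:
    """Emit a command when a blink follows a steady gaze on one button."""

    def __init__(self, buttons: List[Button], dwell_samples: int = 5):
        self.buttons = buttons
        self.dwell_samples = dwell_samples  # hypothetical fixation threshold
        self._current: Optional[Button] = None
        self._count = 0

    def update(self, gaze_x: float, gaze_y: float, blink: bool) -> Optional[str]:
        hit = next((b for b in self.buttons if b.contains(gaze_x, gaze_y)), None)
        if hit is not self._current:        # gaze moved to another target
            self._current, self._count = hit, 0
        if hit is None:
            return None
        if blink:
            if self._count >= self.dwell_samples:
                self._count = 0             # require a fresh fixation next time
                return hit.command
            return None                     # blink came too early, ignore it
        self._count += 1                    # one more steady sample on this button
        return None


if __name__ == "__main__":
    ui = GazeSelector([Button("takeoff", 0, 0, 100, 50),
                       Button("land", 0, 60, 100, 50)], dwell_samples=3)
    for _ in range(3):
        ui.update(10, 10, blink=False)
    print(ui.update(10, 10, blink=True))
```

Requiring a fixation before the blink counts is what keeps natural, involuntary blinks from firing commands while the gaze is still moving.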

Because EOG data is sequential, we use a Long Short-Term Memory (LSTM) model. We train this model to convert sequences of EOG signals into screen coordinates, effectively mapping eye movements to specific pixels on the monitor. The first step is collecting a dataset of EOG signals, and the second is training the LSTM model on that data. After training, the model is deployed in our application, where it interprets eye movements in real time to control the GUI, translating gaze into commands.
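Before any training can happen, the recorded stream has to be cut into fixed-length sequences paired with screen coordinates. The sketch below shows one plausible way to prepare such examples. It is not the project's actual data pipeline: the function name, the window length, the scaling to the 0 to 1 range, and the idea that each sample has a known on-screen target recorded alongside it are all assumptions.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[int, int]      # (vertical, horizontal) raw ADC counts
Target = Tuple[int, int]      # (x, y) screen pixel the user was looking at
Example = Tuple[List[Tuple[float, float]], Target]

ADC_MAX = 1023                # largest 10-bit reading


def make_windows(samples: Sequence[Sample],
                 targets: Sequence[Target],
                 window: int = 3) -> List[Example]:
    """Pair each run of `window` consecutive samples with the target
    recorded at the run's last sample."""
    if len(samples) != len(targets):
        raise ValueError("samples and targets must have the same length")
    examples: List[Example] = []
    for end in range(window, len(samples) + 1):
        # Scale raw counts to the 0..1 range so both channels are comparable.
        seq = [(v / ADC_MAX, h / ADC_MAX) for v, h in samples[end - window:end]]
        examples.append((seq, targets[end - 1]))
    return examples


if __name__ == "__main__":
    raw = [(0, 0), (1023, 0), (0, 1023), (1023, 1023)]
    pix = [(0, 0), (10, 0), (0, 10), (10, 10)]
    for seq, target in make_windows(raw, pix, window=3):
        print(seq, "->", target)
```

Each example is a sequence of `window` time steps with two features per step, which is the (time steps, features) layout that LSTM layers in common deep learning libraries consume.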